Real-Time Object Detection

YOLO-Based Real-Time Object Detection for Autonomous Driving in CARLA

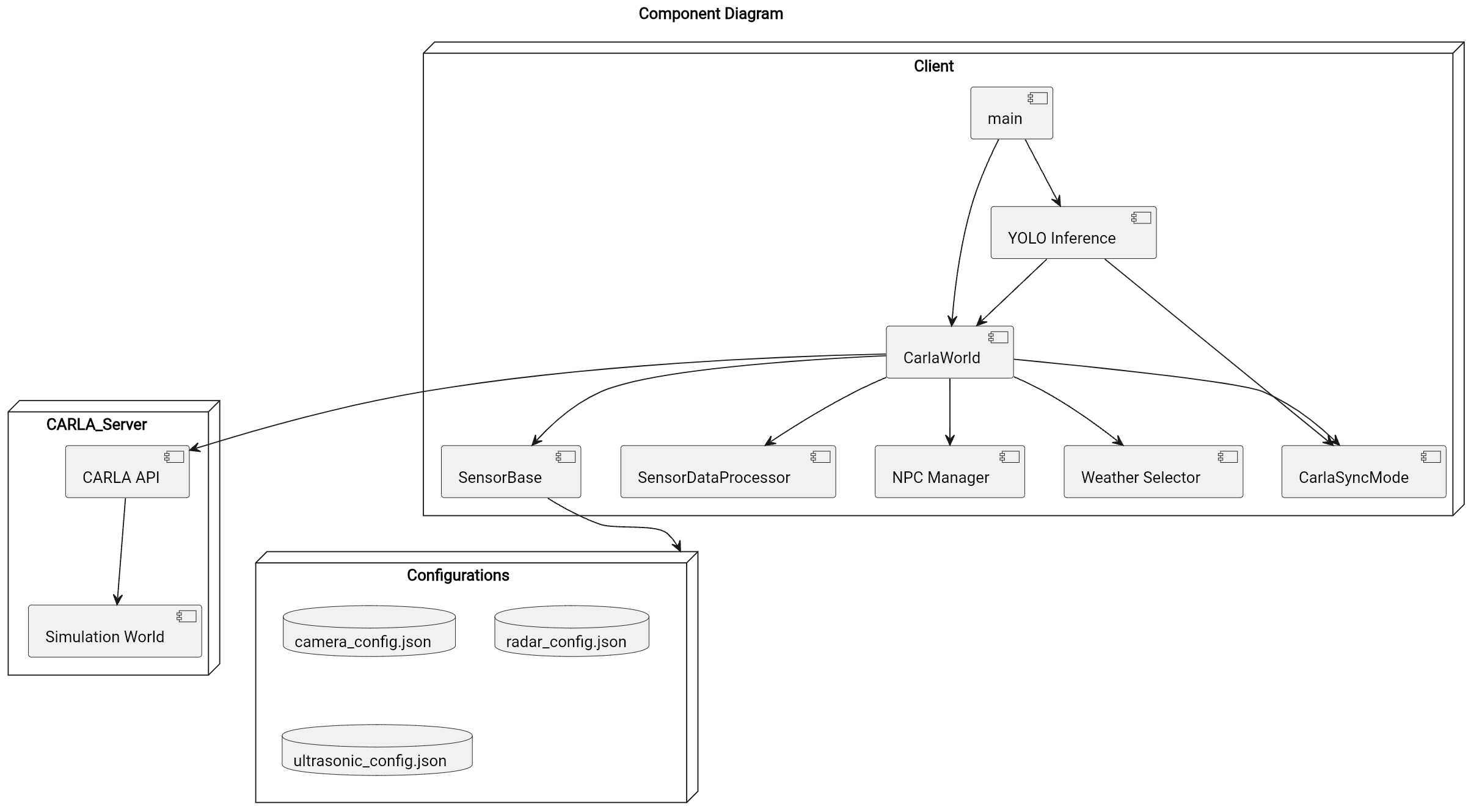

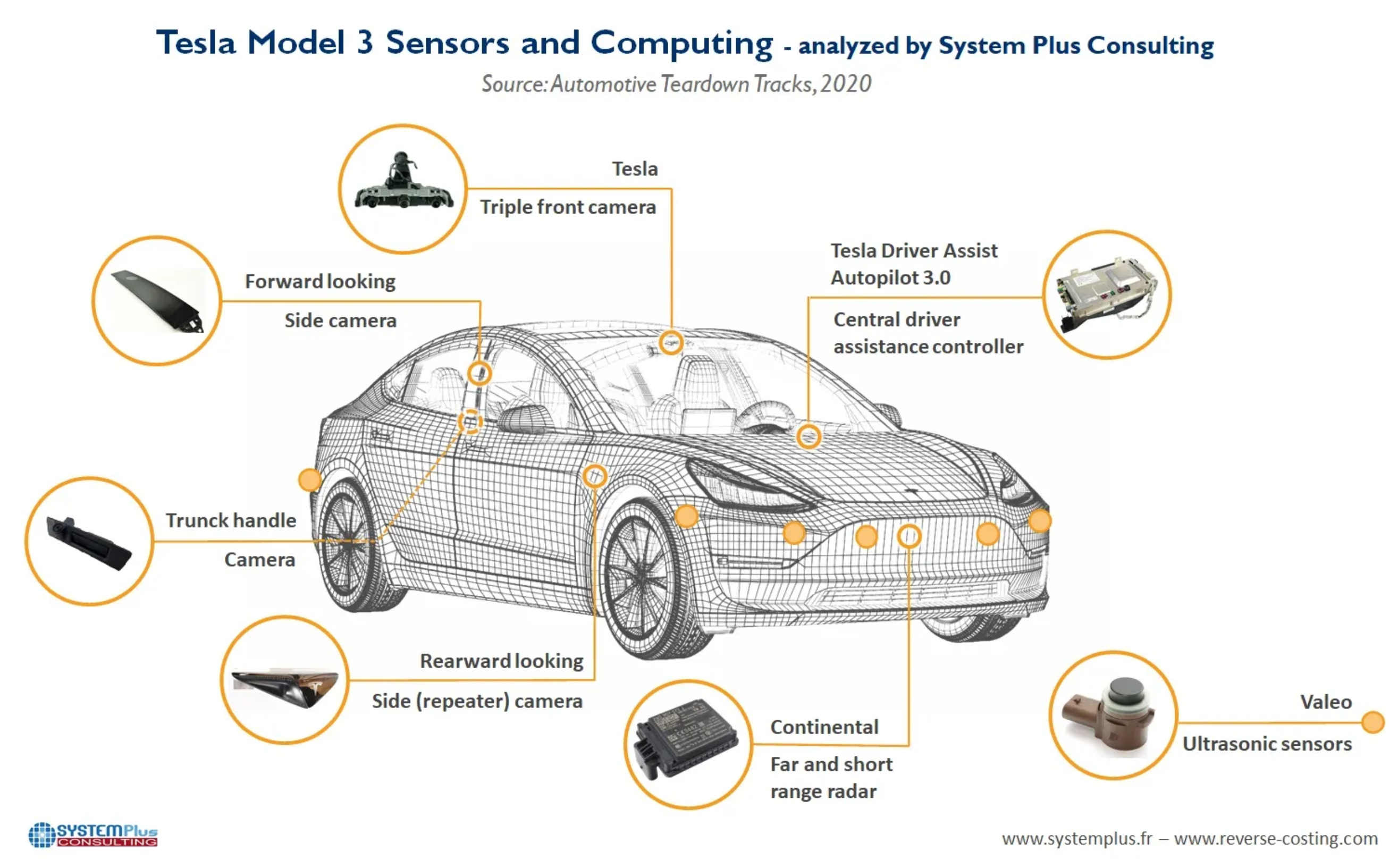

This project explored real-time object detection for autonomous driving using the CARLA Simulator. A simulated Tesla Model 3 was equipped with eight cameras, 12 ultrasonic sensors, and a radar to generate multi-modal synthetic data for training and evaluating state-of-the-art object detection models.
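The sensor suite described above could be declared as data before anything is spawned in the simulator. The following is only a sketch under stated assumptions: the evenly spaced yaw angles and mounting heights are placeholder values, not the project's actual layout, and because CARLA has no dedicated ultrasonic sensor, `sensor.other.obstacle` is used here as a hypothetical stand-in. `SensorSpec`, `build_rig`, and `spawn_rig` are illustrative names.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSpec:
    name: str
    blueprint: str  # CARLA blueprint id
    yaw_deg: float  # mounting yaw relative to the vehicle's forward axis
    z_m: float      # mounting height in metres (placeholder value)


def build_rig() -> list[SensorSpec]:
    """Describe 8 cameras, 12 ultrasonic stand-ins, and 1 radar."""
    rig = []
    # Eight RGB cameras, evenly spaced around the car (assumed layout).
    for i in range(8):
        rig.append(SensorSpec(f"cam_{i}", "sensor.camera.rgb", i * 45.0, 1.6))
    # Twelve ultrasonic sensors, approximated by obstacle detectors (assumption).
    for i in range(12):
        rig.append(SensorSpec(f"uss_{i}", "sensor.other.obstacle", i * 30.0, 0.5))
    # One forward-facing radar.
    rig.append(SensorSpec("radar_front", "sensor.other.radar", 0.0, 0.7))
    return rig


def spawn_rig(world, vehicle, rig):
    """Attach every sensor in `rig` to `vehicle`; needs a running CARLA server."""
    import carla  # imported lazily so the rig description works without CARLA

    library = world.get_blueprint_library()
    actors = []
    for spec in rig:
        blueprint = library.find(spec.blueprint)
        transform = carla.Transform(
            carla.Location(z=spec.z_m), carla.Rotation(yaw=spec.yaw_deg)
        )
        actors.append(world.spawn_actor(blueprint, transform, attach_to=vehicle))
    return actors


# Count the sensors of each type in the described rig.
counts = Counter(spec.blueprint for spec in build_rig())
print(counts)
```

Keeping the rig as plain data makes it easy to check the sensor counts or to change mounting positions without touching the spawning logic.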

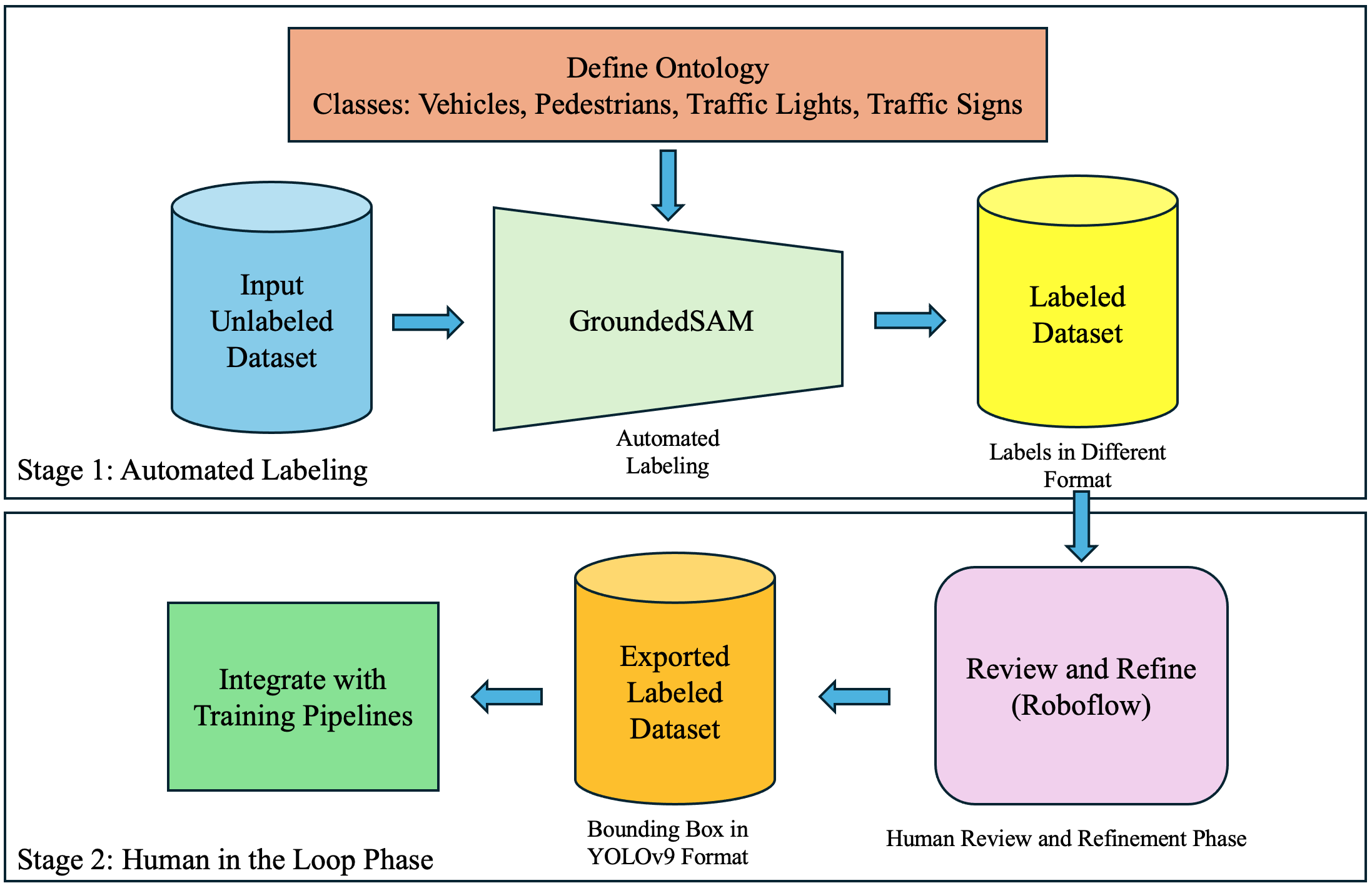

A custom dataset of 6,400 annotated images was created using a two-stage labeling pipeline:

- Automated annotation with the GroundedSAM foundation model.

- Manual refinement with Roboflow to ensure accuracy.
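Whichever tool produces the boxes, YOLO-family trainers typically read one text line per object: a class index followed by the box centre and size, all normalized to the image dimensions. The helper below is a minimal sketch of that conversion. It assumes labels were exported in this format and that boxes arrive as pixel corner coordinates; the class order in `CLASS_NAMES` and the function name `to_yolo_line` are hypothetical.

```python
# Class order is an assumption; only the class names come from the dataset.
CLASS_NAMES = ["vehicle", "traffic_light", "pedestrian", "traffic_sign"]


def to_yolo_line(class_id, box_xyxy, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box_xyxy
    cx = (x_min + x_max) / 2 / img_w  # normalized box centre, x
    cy = (y_min + y_max) / 2 / img_h  # normalized box centre, y
    w = (x_max - x_min) / img_w       # normalized box width
    h = (y_max - y_min) / img_h       # normalized box height
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# A vehicle box covering the central quarter of a 1280x720 frame.
line = to_yolo_line(CLASS_NAMES.index("vehicle"), (320, 180, 960, 540), 1280, 720)
print(line)  # 0 0.500000 0.500000 0.500000 0.500000
```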

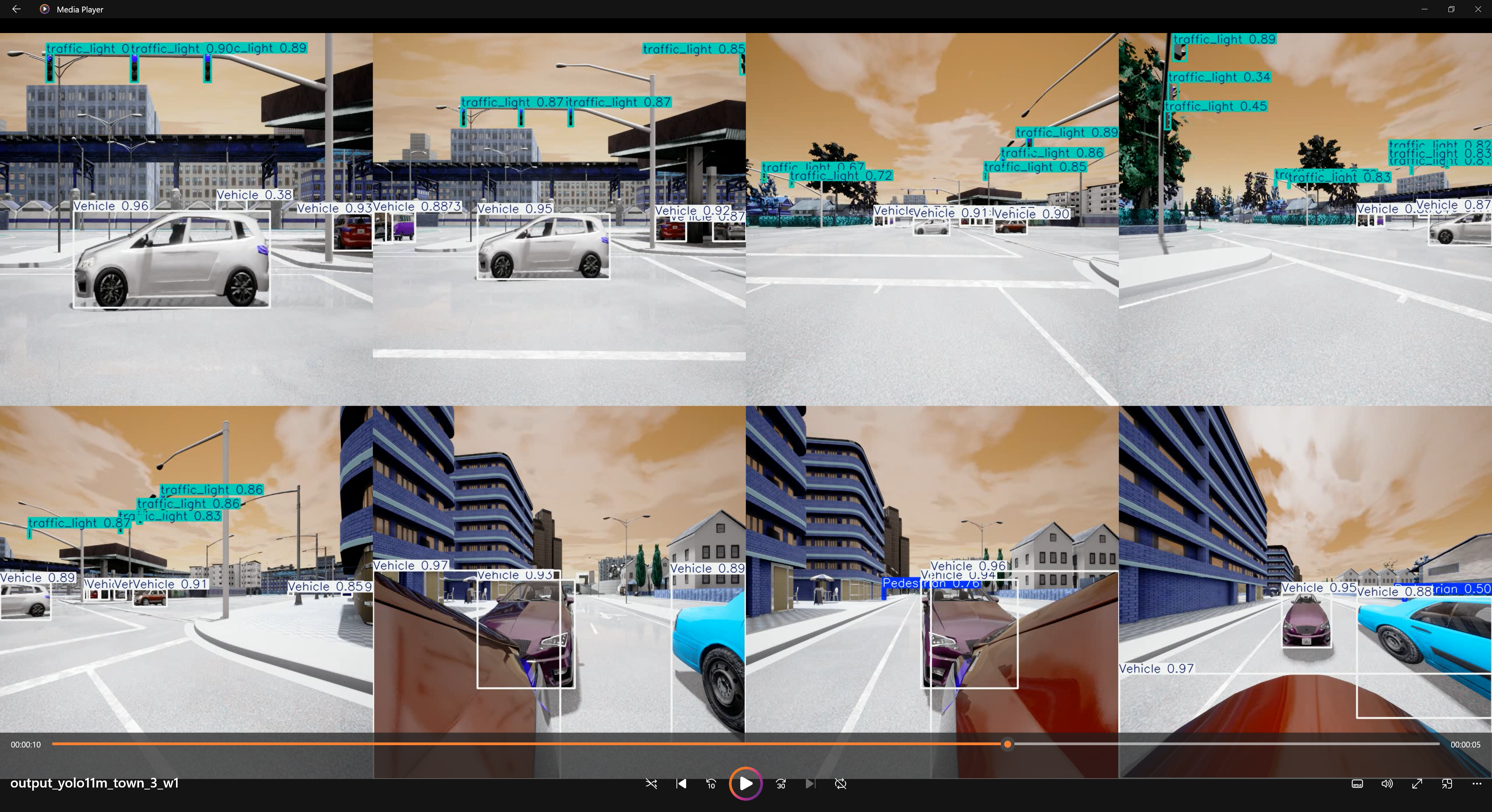

The dataset included vehicles, traffic lights, pedestrians, and traffic signs. Models from the YOLOv8, YOLOv9, YOLOv10, and YOLO11 families were trained and benchmarked. Among them, YOLOv8m and YOLO11m achieved the best balance of localization precision and classification accuracy, scoring highest on the mAP@0.5:0.95 metric. The YOLO11m model was then integrated into CARLA for real-time inference at 30 FPS.
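The mAP@0.5:0.95 metric averages average precision over ten intersection-over-union (IoU) thresholds, from 0.50 to 0.95 in steps of 0.05, so it rewards tightly localized boxes. The sketch below illustrates only the IoU part of that metric, not the full evaluation used in the project. The function name `iou` and the example boxes are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp to zero when the boxes do not overlap.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds behind mAP@0.5:0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

ground_truth = (100, 100, 200, 200)
prediction = (110, 110, 210, 210)  # shifted by 10 px in both directions

score = iou(ground_truth, prediction)
passed = sum(score >= t for t in IOU_THRESHOLDS)
print(f"IoU = {score:.3f}, counts as a match at {passed} of 10 thresholds")
# IoU = 0.681, counts as a match at 4 of 10 thresholds
```

A prediction shifted by only 10 pixels already fails the six strictest thresholds, which is why this metric separates models by localization quality more sharply than mAP@0.5 alone.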

Key Highlights

- Built a realistic CARLA-based Tesla Model 3 simulation with a multi-sensor setup.

- Created a synthetic dataset of 6,400 images using a hybrid GroundedSAM + Roboflow annotation pipeline.

- Trained and evaluated YOLOv8–YOLO11 models across multiple configurations.

- Integrated YOLO11m into CARLA for real-time detection in simulation.

- Demonstrated the strengths of simulation-driven research for autonomous driving and sensor fusion.

Visuals